If two identical hash keys are identified, it means the corresponding data is a duplicate.

The approach is to compute a hash value for each file and each block. In file-based deduplication, file hashes are compared; in block-based deduplication, the hashes of each block are compared. These hashes are stored in the random-access memory of the deduplication storage environment.

Using a patent-pending tape/file-format-aware parser, data blocks are broken into sub-blocks and assigned an identification key (index), calculated using a cryptographic hash function. Dynamic Solutions International data deduplication solutions provide sub-file or block-based deduplication. Duplicate comparison uses cryptographic hash-based matching to detect duplicate blocks. By default SHA256 is used, but this can be changed via an environment variable. Global Deduplication can reach petascale using only a relatively tiny in-memory index. Software-based deduplication is typically less expensive to deploy than dedicated hardware and should require no significant changes to the physical network.

Post-process deduplication and compression: Here, data reduction happens after the data is written to disk. SANsymphony first stores the raw data in the target storage device. Then, the stored data is scanned and analyzed for optimization opportunities. The deduplicated and compressed data is written back to the storage device, where it now occupies less capacity than before. Post-processing allows capacity optimization to be scheduled at non-peak hours, thus minimizing the impact on IOPS during peak hours. Note that the initial capacity allocation on the target device is larger with post-processing, as the raw data is first stored as-is before undergoing data reduction. Post-process deduplication and compression are supported with the EN, ST, and LS editions of SANsymphony.
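The hash-based block matching described above can be illustrated with a minimal Python sketch. It is not the implementation of any product named here: the fixed 4096-byte block size, the function names, and the `DEDUP_HASH` environment variable are all assumptions made for illustration. Each block is keyed by its cryptographic hash, unique blocks are stored once in an in-memory index, and an ordered list of keys is kept so the original data can be rebuilt.

```python
import hashlib
import os

# Hypothetical environment variable, used only in this sketch to pick the
# hash algorithm. SHA-256 is the fallback when it is not set.
HASH_NAME = os.environ.get("DEDUP_HASH", "sha256")


def dedup_blocks(data: bytes, block_size: int = 4096):
    """Split data into fixed-size blocks and keep each unique block once."""
    store = {}   # hash key -> block bytes (the in-memory index)
    recipe = []  # ordered hash keys needed to rebuild the original data
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        key = hashlib.new(HASH_NAME, block).hexdigest()
        # Identical keys are treated as identical blocks, so a block whose
        # key is already in the index is not stored again.
        store.setdefault(key, block)
        recipe.append(key)
    return store, recipe


def rebuild(store, recipe) -> bytes:
    """Reassemble the original data from the stored unique blocks."""
    return b"".join(store[key] for key in recipe)


# Three identical blocks followed by one different block.
sample = b"A" * 4096 * 3 + b"B" * 4096
store, recipe = dedup_blocks(sample)
print(len(recipe), "blocks referenced,", len(store), "blocks stored")
```

Production systems often use content-defined (variable-size) chunking rather than fixed-size blocks; fixed blocks are used here only to keep the sketch short.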
Inline deduplication and compression are supported only with the EN edition of SANsymphony and can be enabled individually or together (deduplication, compression, or both) as needed. When backups are frequent and generate a large amount of redundant data, inline processing is the recommended approach, as it cuts down the data size before the backup is stored.

Global Deduplication: Scalable, fast, content-aware deduplication across the entire dataset.

HASH BASED FILE DEDUPLICATION SOFTWARE PDF

Inline processing reduces disk capacity requirements as data is deduplicated and compressed before it gets stored. SANsymphony scans and analyzes the incoming data for potential optimization opportunities and performs deduplication and compression.

In the latest post (Deduplication: Why Computers See Differences in Files that Look Alike) in his excellent Ball in your Court blog, Craig states that most people regard a Word document file, a PDF or TIFF image made from the document file, a printout of the file and a scan of the printout as being essentially the same thing.

The most recent version of the RDS, 2.49 (as of October 2015), contains more than 42 million hash values for over 150 million files.


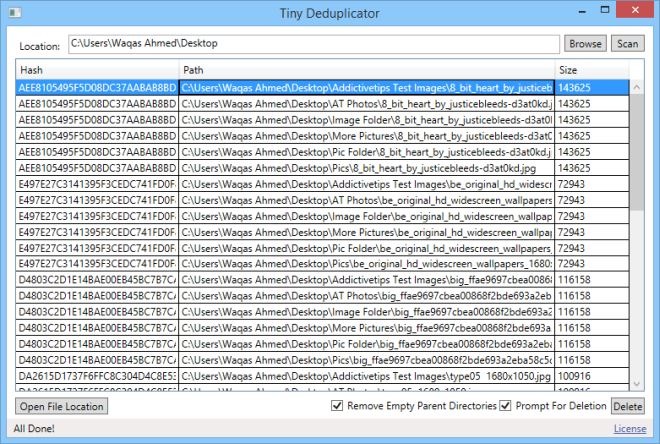

DataCore SANsymphony offers two approaches to performing deduplication and compression. You can choose the appropriate approach based on your business and IT requirements. Inline deduplication and compression: Here, data reduction happens before the data is written to disk.

The RDS uses default software installations of operating systems and end-user software to derive a list of hash values on a file basis.

In this paper, an efficient Distributed Storage Hash Algorithm (DSHA) has been proposed to lessen the memory space occupied by the hash value, which is used to identify and discard redundant data in the cloud. Storing this hash value requires additional memory space. Experimental analysis shows that the proposed strategy reduces the memory utilization of the hash value and improves data read/write performance.

Run through the entire directory using readdir(), and get each file's name (although you won't have duplicate names), size and MD5 hash. If there are any duplicates based on size + hash, flag them, and let the analyst decide whether to delete them.
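The directory scan described above (file name, size and MD5 hash, with duplicates flagged rather than deleted) could look roughly like the following Python sketch. It uses `os.scandir` as the Python counterpart of `readdir()`; the function names `md5_of_file` and `find_duplicates` are made up for this example, and only a single, non-recursive directory is assumed.

```python
import hashlib
import os
from collections import defaultdict


def md5_of_file(path, chunk_size=65536):
    """Return the MD5 hex digest of a file, read in chunks to limit memory use."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(directory):
    """Group regular files in one directory by (size, MD5) and return the
    groups that contain more than one file name. Nothing is deleted."""
    groups = defaultdict(list)
    with os.scandir(directory) as entries:
        for entry in entries:
            # Skip subdirectories and symbolic links.
            if entry.is_file(follow_symlinks=False):
                key = (entry.stat().st_size, md5_of_file(entry.path))
                groups[key].append(entry.name)
    return [sorted(names) for names in groups.values() if len(names) > 1]
```

Calling `find_duplicates("some_directory")` returns a list of name groups for the analyst to review. MD5 is used here because the text names it; it is adequate for spotting accidental duplicates but is not collision-resistant against deliberately crafted files.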

Duplication is unavoidable when handling huge volumes of data. Data deduplication is an efficient approach in cloud storage environments that uses different techniques to deal with duplicate data. These techniques are categorized based on how the bulk data are handled before indexing or hashing (Harnik et al., 2019; Lin et al., 2018; Zhou et al., 2018). Existing systems generate the hash value using cryptographic hash algorithms such as MD5 or the Secure Hash Algorithm (SHA-1) to implement deduplication. These algorithms produce fixed-length outputs of 128 bits and 160 bits, respectively, to identify the presence of duplication.
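The fixed output lengths mentioned above can be checked with Python's standard `hashlib` module. This is a small illustrative sketch; the helper name `digest_bits` is an assumption, not something from the paper.

```python
import hashlib


def digest_bits(algorithm: str) -> int:
    """Return the output length of a hashlib algorithm in bits."""
    return hashlib.new(algorithm).digest_size * 8


for name in ("md5", "sha1", "sha256"):
    print(name, digest_bits(name), "bits")

# The digest length does not depend on the input length, which is why the
# per-block memory cost of the hash index is constant.
short = hashlib.sha1(b"x").digest()
long_ = hashlib.sha1(b"x" * 1_000_000).digest()
print(len(short) == len(long_))
```

The memory overhead that the paper aims to reduce comes from storing one such fixed-length value per file or block.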

The increasing volume of digital data in cloud storage demands more storage space and efficient techniques to handle this data. Deduplication can be categorized into file, block, or byte levels based on the amount of data being chunked at the initial stage.

Lecture Notes on Data Engineering and Communications Technologies
